Looking Beyond 400G – A System Vendor Perspective

TEF21 Keynote – Rakesh Chopra, Cisco

Solving the Power Challenge

TEF21 kicked off with a keynote presentation from Cisco Fellow Rakesh Chopra, who shared his view on what happens after 400G from a system vendor perspective. During his presentation, Rakesh addressed system architectures today, what’s next as we think about doubling the bandwidth again, and how to manage the endlessly increasing power required by systems and networks.

According to Rakesh, power is everything, limiting what can be built, what customers can deploy and what our planet can sustain. He asserted that the industry should adopt a “power first” design and deployment methodology – a big change for the industry – and offered a way forward. Rakesh outlined an architectural approach to solving power that will include co-packaged optics and explored options for building data centers with a power-first methodology. He closed with an important call to action: we are at an inflection point in the industry where the pace of bandwidth growth and innovation isn’t slowing down, and power is growing at an unsustainable rate. Therefore, it is a moral imperative that the industry solve this power challenge, ultimately driving a new set of innovations to bring these next-gen technologies to the industry.

After his presentation, the audience engaged Rakesh with thought-provoking questions. His insightful responses are captured below, offering needed perspective on the pressing issue of how the Ethernet ecosystem can and should handle power challenges.

TEF 2021 Keynote Q&A with Rakesh Chopra

Peter Jones: I’ve got a couple of remarks and a couple of questions. Morality in technology is an interesting thought, and I think it’s good for us to remember the impact that we have on the rest of the world. How did you come to this conversation of focusing on morality, as opposed to technology?

Rakesh Chopra: I think it’s actually not just focusing on morality. It’s a technology, a business, and a moral imperative. Because power fundamentally limits the type of systems that we can build, we have to solve it from a technology perspective as an equipment vendor because it’s limiting what our customers can actually deploy in their data centers. Our customers are out of power in their facilities. Every watt we consume in networking means that they can’t consume a watt in servers. So, we’ve got to get to the point where we are sort of really doubling down on driving that power down. I think the morality piece is a fascinating new piece of that puzzle. At the end of the day, and this might be my personal opinion here, we only have one planet to live on, and because the things that we work on today are so prevalent in the industry, I think it actually gives us a great lever, as an industry, to try and change that trajectory. I think the other piece is, because power costs our customers so much money, and some of our customers are sort of adopting green initiatives themselves, there’s a double whammy for them. Not only is it expensive for them to deploy, but they have to offset that carbon somewhere else. So, again, I think this whole notion of morality is starting to filter into the discussion unlike before. Because you’ve got all three of those things going together, I just think it’s a fascinating time to be an engineer.

Peter Jones: If power per bit doesn’t improve, what do you do next? I think what you said is that it has to improve.

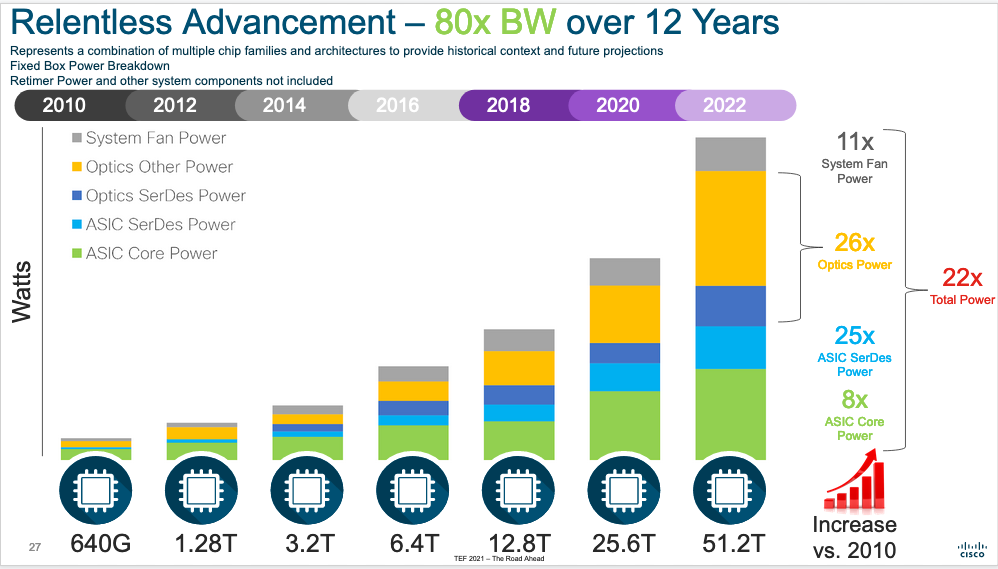

Rakesh Chopra: I think that’s right. To be honest, power per bit (per second) has actually always been improving. So, if you look at the data, it’s not that the power per bit has not gotten better over time. Over that period, we’ve increased the bandwidth by 80 times, but we’ve only increased the power by 22 times. So our power efficiency is getting better. The power per bit actually has done a pretty good job of improving. My contention is that it’s just not enough. We actually have to change the slope. We need to be doubling our bandwidth with a very small power increase. We’ve got to grapple with this endless power increase, generation after generation, and really take that as the high-order bit when we make tradeoffs. Because we all know that, in engineering, everything is a tradeoff. You can’t have one thing without impacting another thing. I think that power has now been elevated, in my perspective, to the fundamental thing that we need to address as an industry.
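The efficiency figures in this answer can be checked with a few lines of arithmetic. This minimal Python sketch uses only the 80x bandwidth and 22x power factors quoted above; the helper names are hypothetical, and the "power per bandwidth doubling" figure is a geometric average derived here, not a number given in the talk.

```python
import math

def power_per_bit_ratio(bandwidth_growth: float, power_growth: float) -> float:
    """New power per bit divided by the starting power per bit over the same span."""
    return power_growth / bandwidth_growth

def power_growth_per_doubling(bandwidth_growth: float, power_growth: float) -> float:
    """Average (geometric) power multiplier for each doubling of bandwidth.

    Assumption: growth is treated as smooth across log2(bandwidth_growth) doublings.
    """
    doublings = math.log2(bandwidth_growth)
    return power_growth ** (1.0 / doublings)

BW_GROWTH = 80.0   # bandwidth growth factor quoted in the Q&A
PWR_GROWTH = 22.0  # power growth factor quoted in the Q&A

ratio = power_per_bit_ratio(BW_GROWTH, PWR_GROWTH)
per_doubling = power_growth_per_doubling(BW_GROWTH, PWR_GROWTH)

print(f"power per bit: {ratio:.3f}x of the starting value")
print(f"efficiency gain: {1 / ratio:.2f}x")
print(f"power multiplier per bandwidth doubling: {per_doubling:.2f}x")
```

Under these assumptions, power per bit fell to 0.275x of its starting value (about a 3.64x efficiency gain), yet each doubling of bandwidth still came with roughly 1.63x more total power. That is the "slope" Rakesh argues has to change: efficiency improves, but absolute power keeps climbing every generation.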

Peter Jones: Digging into that one a little bit more, I think what you’re talking about is the power density of the system and how it limits your ability. We all want to get more stuff in a small place, and the ability to cool those things is becoming less and less credible. Do you have any impressions on the impact of that from the big data center guys?

Rakesh Chopra: I think there are several aspects to that. It’s a great view that you mentioned there. Data centers used to be limited by the size of the equipment that they would deploy. I think for the last several generations that hasn’t been true. I think the fundamental limitation for data centers is the power per rack, the power per row, and the power per building. So, the thing which limits it is no longer how small you can crunch your equipment, it’s how efficient that final equipment is. I wouldn’t be surprised if we actually see an expansion of equipment size in order to drive that efficiency vector moving forward. But the flip side is what I commented on earlier about the relationship between distance and power efficiency – there is an inherent advantage in cramming things together. So, you’ve got these two effects, wanting to cram things together to make them more power efficient, but also wanting to spread them out to be able to cool them more efficiently, fighting against each other endlessly.

Peter Jones: If 51.2 terabits is still early for CPO because a traditional system architecture works at that speed, when do co-packaged optics go from being interesting to being really needed?

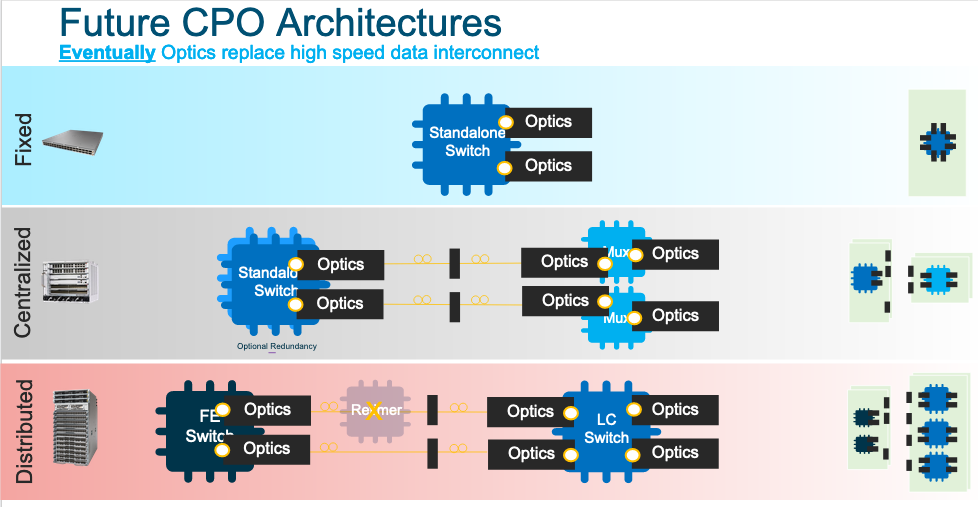

Rakesh Chopra: Great question. It’s clear at this point that building what I would call a traditional system architecture, with 51.2 terabits and standard pluggable optics, is absolutely possible to do. And I think you will see vendors and customers consuming that style of architecture. I think there are two reasons to make co-packaged optics (CPO) happen in that generation. The first is around efficiency. I think if you run through all of the math, at the end of the day, you’ll find that you get a better power per bit with CPO than with traditional pluggable optics. But I think the second one is actually more important: anybody who claims that a CPO-based architecture is an easy thing to build is probably missing the complexities that show up. As an industry we want to make sure that we’re embarking on this transition before it becomes a fundamental requirement, before we’ve driven off the cliff where you can’t build a traditional system architecture. That complexity applies both to how equipment manufacturers build equipment and how ASIC and optics manufacturers build those devices, and also to how customers deploy them. What does it mean for a data center customer to lose this notion of pluggability, from a failure-rate, a management, and an ecosystem perspective? There are many impacts associated with co-packaged optics that, as an industry, we’ve got to work through and get right before it’s too late.

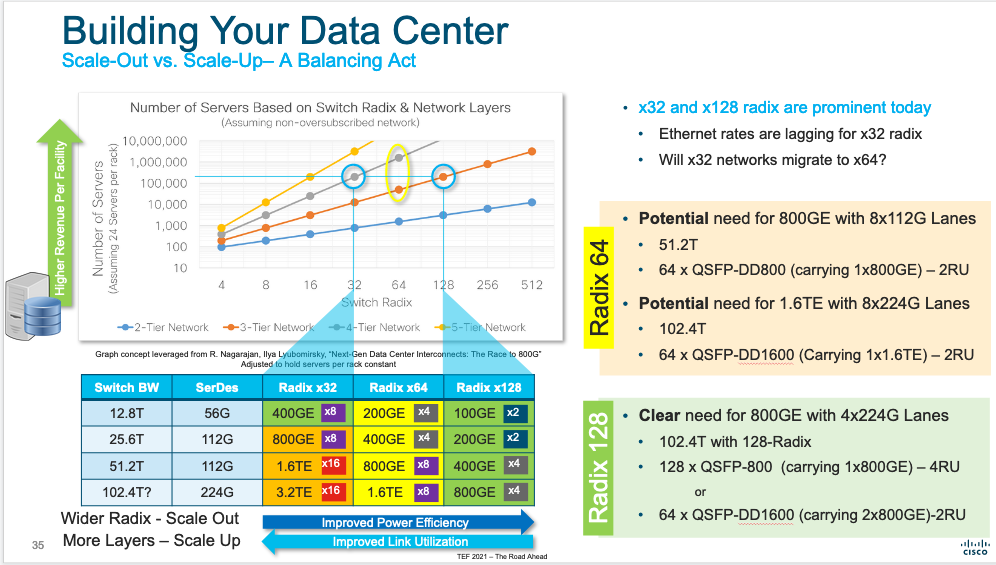

Peter Jones: I think the meta question is, with a combination of reaches and radix and everything else, does today’s traditional design – in terms of how the network is laid out – stay or does that morph? And if so, do we know what that looks like?

Rakesh Chopra: Great question. I hinted a little bit about this with the radix of 32 going to a radix of 64. When you double-click on the data, what you really see is that the bandwidth growth within these hyperscale or wide-scale data centers far exceeds what we’re doing from an industry perspective in terms of Ethernet MAC rate adoption. So when you think about what that really means, it means the radix of these networks keeps growing and growing, or you’re going up in topology. Again, I suspect that, because power is the fundamental limiting factor, people will have the tendency to try and scale to the right.

Peter Jones: When you say scale to the right, what do you mean? Do you mean higher radix? More power?

Rakesh Chopra: Higher radix, yes. Scale-out style architecture. So going from a by-32 to a by-64 or by-128, or even up to a by-512 radix. I think there’s going to be a tendency to do that, but I think one of the challenges that exists there is if you think about a 51.2 terabit device with a radix of 512 by 100-gig-E, that works okay if your servers are attaching into your network at 25 gig. But what happens when those servers become 100-gig? Or you have AI-based compute, which is plugging in at N by 400-gig? So, you’ve got this endless push between scaling out and scaling up, and I think it will be interesting to see how it plays out over time.
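To make the radix tradeoff concrete, this Python sketch computes radix as switch capacity divided by port speed, which reproduces the 512 × 100GbE example for a 51.2 Tb/s device, and then the number of endpoints a non-blocking two-tier leaf-spine fabric can reach at that radix. The k²/2 endpoint formula is the standard folded-Clos result, assumed here rather than taken from the talk, and the function names are hypothetical.

```python
def radix(switch_capacity_gbps: int, port_speed_gbps: int) -> int:
    """Number of ports a switch ASIC can expose at a given port speed."""
    return switch_capacity_gbps // port_speed_gbps

def two_tier_endpoints(k: int) -> int:
    """Endpoints in a non-blocking two-tier folded Clos built from radix-k switches.

    Assumption: each leaf splits its k ports evenly, k/2 down to endpoints and
    k/2 up to spines; each spine has k ports, so the fabric holds k leaves,
    giving k * (k/2) endpoints in total.
    """
    return k * (k // 2)

CAPACITY_GBPS = 51_200  # the 51.2 Tb/s device discussed in the Q&A

for speed in (100, 400):
    k = radix(CAPACITY_GBPS, speed)
    print(f"{speed}G ports: radix {k}, two-tier endpoints {two_tier_endpoints(k)}")
```

Under these assumptions, the same 51.2 Tb/s device yields radix 512 at 100G and radix 128 at 400G, and the two-tier reach drops from 131,072 to 8,192 endpoints. Because reach scales with the square of the radix, faster server attachment works directly against the flat, high-radix, scale-out approach Rakesh describes.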

You can view Rakesh’s TEF21 presentation, along with all of the TEF21 presentations, on-demand here.