New Applications Driving Higher Bandwidths – Part 2

TEF21 Panel Q&A – Part 2

Nathan Tracy, Ethernet Alliance Board Member and TE Connectivity

Brad Booth, Microsoft

Tad Hofmeister, Google

Rob Stone, Facebook

Addressing Emerging Network Challenges

According to Cisco’s Annual Internet Report (2018-2023), the number of devices and connections is growing faster than the world’s population. Compounded by the meteoric rise of higher-resolution video – expected to hit 66 percent by 2023 – along with surging M2M connections, Wi-Fi’s ongoing expansion, and increasing mobility among Internet users, the impact on the network is significant.

Day Two of the TEF21: The Road Ahead conference focused on today’s explosive application space as a driver for higher speeds. In New Applications Driving Higher Bandwidths, moderator and Ethernet Alliance Board Member Nathan Tracy of TE Connectivity and panelists Brad Booth of Microsoft (Paradigm shift in Network Topologies), Rob Stone of Facebook (Co-packaged Optics for Datacenters), and Tad Hofmeister of Google (OIF considerations for beyond 400ZR) discussed how their organizations plan to address emerging network challenges wrought by today’s mounting bandwidth demands.

At the presentation’s conclusion, the audience engaged with panelists on numerous questions about technology developments needed to address escalating speed and bandwidth requirements. Their insightful responses are captured below in part two of a five-part series.

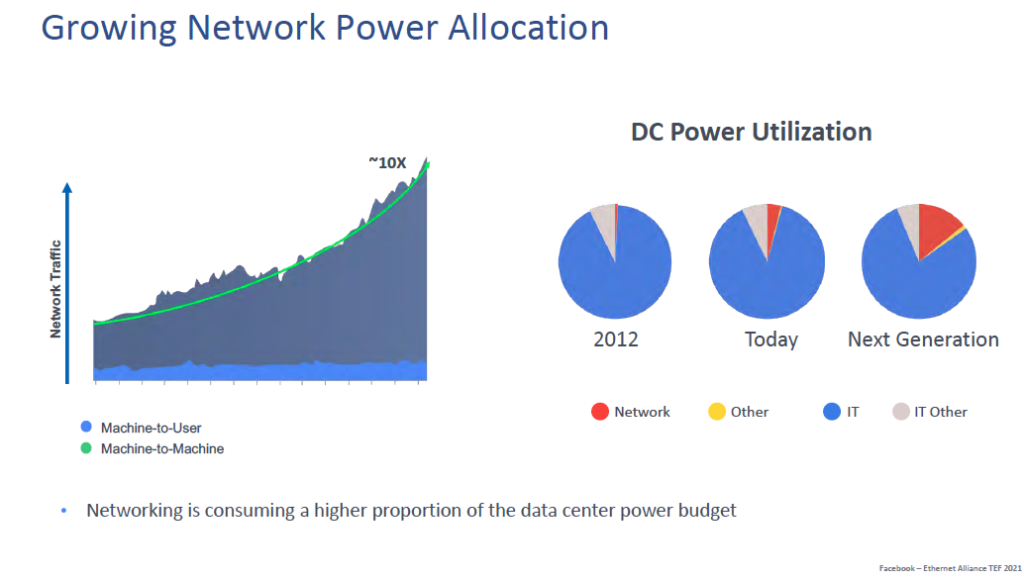

Does Power Matter?

Nathan Tracy: Tad, does power matter at Google?

Tad Hofmeister: Yes, power definitely matters. I don’t want people to walk away thinking Google’s only going to build pluggable transceivers or that we’re not interested in co-packaged optics. It’s really how far out we’re looking. I focused more on shorter term and what OIF is working on as far as getting coherent into this 800 gig. Power is critical. That is one of the motivations for us to adopt an 800 gig switch – or 800 gigabit capacity port. The overall solution is lower power because the watts per gigabit of a fabric built on these larger sized switch chips is lower.
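As an editorial illustration of the watts-per-gigabit point (not something presented by the panel), the arithmetic can be sketched with a textbook three-tier fat tree built from identical k-port switch chips. The topology, the function names, and every wattage and port-speed figure below are hypothetical assumptions chosen only to show the shape of the calculation. Optics power is deliberately left out.

```python
# Illustrative sketch only: a textbook three-tier k-ary fat tree,
# not any panelist's actual network. All numeric inputs are hypothetical.

def fat_tree_hosts(k: int) -> int:
    """Host ports supported by a three-tier fat tree of k-port switches (k even)."""
    return k ** 3 // 4

def fat_tree_switches(k: int) -> int:
    """Switch chips needed: k*k in the edge and aggregation tiers, k*k/4 in the core."""
    return 5 * k ** 2 // 4

def fabric_watts_per_gbps(k: int, chip_watts: float, port_gbps: float) -> float:
    """Switch-silicon power divided by total host-facing bandwidth."""
    total_watts = fat_tree_switches(k) * chip_watts
    total_gbps = fat_tree_hosts(k) * port_gbps
    return total_watts / total_gbps

# Hypothetical comparison: same radix, but the larger chip doubles the
# port speed while drawing less than twice the power.
small = fabric_watts_per_gbps(k=64, chip_watts=400.0, port_gbps=400.0)
large = fabric_watts_per_gbps(k=64, chip_watts=600.0, port_gbps=800.0)

print(f"smaller chips: {small:.4f} W/Gbps")
print(f"larger chips:  {large:.4f} W/Gbps")
```

Under these assumptions the fabric-level figure reduces to 5 × chip watts ÷ (k × port speed), so any chip generation that raises capacity faster than it raises power lowers the watts per gigabit of the whole fabric.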

Nathan Tracy: Is this a discussion on power and managing that? Or is this a discussion on next generation bandwidth? Where do we hit the wall first? Is it power? Or is it bandwidth?

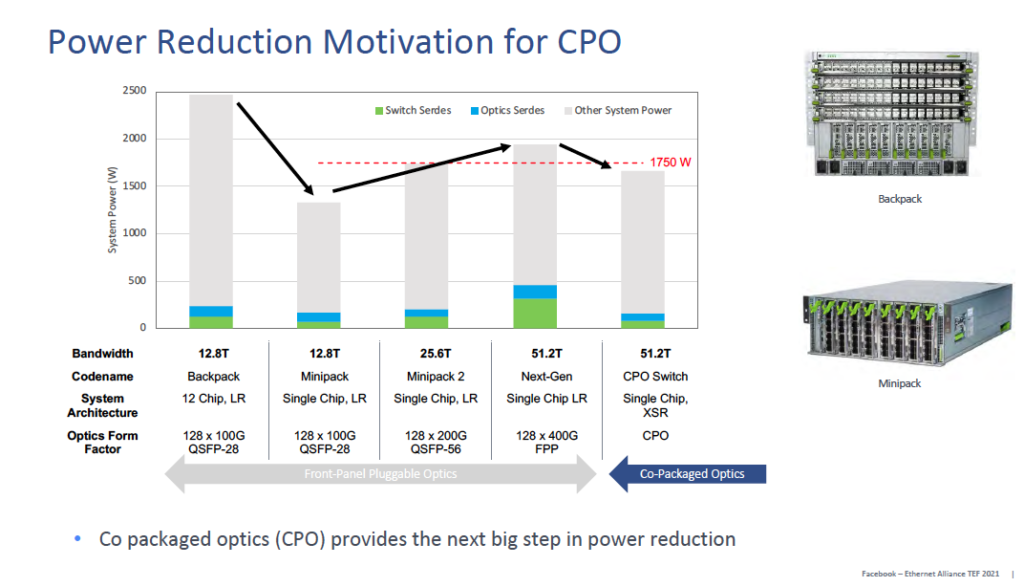

Brad Booth: The key aspect here, Nathan, is the implication of power on bandwidth. If we were to continue using the same technologies we use today, obviously, we’d flatline in our power band. But we have a growing need to innovate and provide for our customers, at least for Microsoft-type customers, which are Fortune 500 and external companies that come to us with requirements for infrastructure as a service. A lot of them are running their workloads on our computers. In some cases we’re even bringing some of their third-party equipment into our data centers or establishing connections to their on-premises third-party equipment.

What’s constraining us is that as CPUs and GPUs and other equipment start needing more and more power, one of the things that we look at is power “limitation.” It’s not like we can simply go next door, fire up our power generator and suddenly have more bandwidth. We have to rely a lot on what’s being built out in the world infrastructure and what’s available to us through those infrastructures. A lot of our companies have invested in technologies and industry to provide a cleaner form of power to us. But that doesn’t necessarily mean that we’re limitless in power. If we had limitless power, like a nuclear fusion plant right next to us, some of these constraints would be lifted. But we don’t, so we have to operate within that constraint while still trying to grow and maximize the usage of our bandwidth. That’s why you’re seeing industry, and also government agencies like ARPA-E and DARPA, looking at PIPES and at how you get greater bandwidth density with improved energy efficiency. Rob and I, working with our respective companies, realize that one of the ways we’re going to have to proceed is co-packaged optics, because switch silicon is what’s really pushing the envelope of bandwidth and energy efficiency density. But that carries over into everything.

It’s not going to be just showing up on switches; it’s going to be showing up in GPUs and CPUs and other components. Why am I putting a copper trace on there, driving across a motherboard to talk to something that’s optics, if I can embed that optics and save all that power I would have wasted? We’re trying to be more cognizant of the energy that we’re given and how we maximize the bandwidth that we can provide with that energy.

Rob Stone: There are a couple of levers we can pull. Each endpoint requires a certain bandwidth to other endpoints within a cluster of machines. If we can’t deliver the power to support either the endpoints or the network, we’ll just have to put fewer endpoints within an existing data hall, and that’s not very desirable. Partially populating things is not very efficient, so we would have to build additional data centers with higher power overhead and cooling infrastructure. There are some things we can do if we run into the wall, as you put it, Nathan. But they’re pretty undesirable, and we feel like there is some rationale to using co-packaged optics to help address this power issue.

Tad Hofmeister: At the building level there’s a power budget, so obviously the less power we consume the better. There’s a cost associated with the power. There’s an environmental impact from the power and from the energy required for cooling, so there are incentives to minimize power there. But the biggest challenge from a power point of view is at the chassis level and the optics level. Today just being able to cool the optics is one of the problems in getting the front panel density or the I/O density at the chassis level. I think that’s the biggest challenge. I would love to see some of the anticipated improvements on co-packaged optics because that would really enable the higher capacity.

Nathan Tracy: Increasingly power is a big part of the focus in this networking discussion. Is the first problem we come to one of power supply, or of the cooling that is a result of the power consumption?

Rob Stone: Both the cooling and the power delivery are infrastructure limitations, and they were generally designed in concert. There are some things you can do. For example, if we partially populated the data center, each rack could use twice as much power, and then you’d move the constraints to the rack level. So how do you cool the optics, how do you cool the silicon? There are some technologies which could be applied there. We’ve been fortunate to be able to use air cooling, but people are starting to use liquid cooling. It was publicly announced that the TPU cluster uses liquid cooling. So for these very dense machines you can start to use some newer technologies, and then you maybe move the problem from one point to another. I don’t know whether that answers your question.

Brad Booth: Supplying the power is one of the aspects that is not that radically difficult. There are a number of ways to get it there. The biggest problem is that you’re creating high thermal density. Air is a fairly inexpensive way to cool, but the little fans in the back actually consume a fair amount of power within the data center. People have looked at cold plates – which obviously have their own issues of having to worry about plumbing – closed loop or open loop systems, and immersion cooling. People have even talked of doing things like cryogenic tanks. The bigger problem to solve is the cooling aspect of this, and decreasing power certainly helps with that cooling equation. But we’re also talking about increasing our speeds and bandwidth requirements, so that’s just going to jack up the thermal density on a lot of these components.

Tad Hofmeister: I agree. Cooling is the bigger challenge.

To access TEF21: New Applications Driving Higher Bandwidths on demand, and all of the TEF21 on-demand content, visit the Ethernet Alliance website.