Ethernet in the Age of AI

By John D’Ambrosia, Advisor, Board of Directors, Ethernet Alliance

The computational power required to train the largest AI models continues to grow at a staggering rate. In 2018 OpenAI noted that this processing power had been doubling every 3.4 months since 2012.[i] High-performance networking will play an integral part in helping the industry satisfy this appetite for processing power.
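To put the cited rate in perspective, the short Python sketch below converts a 3.4-month doubling period into growth over longer spans. It assumes steady exponential growth, which simplifies the trend OpenAI reported, and the function names are illustrative only.

```python
import math

# Doubling period for AI training compute cited in the OpenAI analysis (2012-2018).
DOUBLING_MONTHS = 3.4


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Multiplicative growth after `months`, assuming a constant doubling period."""
    return 2 ** (months / doubling_months)


def months_to_reach(factor: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months needed to grow by `factor`, assuming a constant doubling period."""
    return doubling_months * math.log2(factor)


if __name__ == "__main__":
    print(f"Growth per year: {growth_factor(12):.1f}x")  # prints "Growth per year: 11.5x"
    print(f"Growth over two years: {growth_factor(24):.0f}x")
    print(f"Months to grow 1,000x: {months_to_reach(1000):.1f}")
```

At this pace, compute grows by roughly an order of magnitude every year.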

This represents an exciting opportunity for the Ethernet community.

Within the IEEE, the IEEE P802.3dj project is developing the underlying 200Gb/s PAM4 signaling technologies for chip-to-chip, chip-to-module, backplane, copper cable, and single-mode fiber interfaces, which will be used to define the different specifications for 200GbE, 400GbE, 800GbE, and 1.6TbE. The architecture will also support multiple forward error correction (FEC) schemes, as the different specifications will require. Note that while the technical decisions have been made, the Task Force has only just begun writing the actual standard, work that is expected to continue through 2026.
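As a rough illustration of how a single 200Gb/s lane rate scales across the four Ethernet rates, the Python sketch below computes how many lanes each rate needs and the nominal PAM4 symbol rate per lane. It assumes every interface is built from identical 200Gb/s lanes and ignores FEC and coding overhead, so real line rates are somewhat higher; the actual P802.3dj physical layer specifications may combine lanes, wavelengths, or fibers differently. The constant and function names are hypothetical.

```python
import math

LANE_RATE_GBPS = 200                 # assumed payload rate per lane
PAM4_BITS_PER_SYMBOL = math.log2(4)  # PAM4 has 4 amplitude levels -> 2 bits per symbol

# Ethernet rates named in the P802.3dj scope, in Gb/s.
ETHERNET_RATES_GBPS = {"200GbE": 200, "400GbE": 400, "800GbE": 800, "1.6TbE": 1600}


def lanes_needed(rate_gbps: int, lane_rate_gbps: int = LANE_RATE_GBPS) -> int:
    """Number of lanes required to carry `rate_gbps`, rounding up."""
    return math.ceil(rate_gbps / lane_rate_gbps)


def nominal_symbol_rate_gbd(lane_rate_gbps: float, bits_per_symbol: float) -> float:
    """Symbol rate in GBd, ignoring FEC and coding overhead."""
    return lane_rate_gbps / bits_per_symbol


if __name__ == "__main__":
    baud = nominal_symbol_rate_gbd(LANE_RATE_GBPS, PAM4_BITS_PER_SYMBOL)
    for name, rate in ETHERNET_RATES_GBPS.items():
        print(f"{name}: {lanes_needed(rate)} x {LANE_RATE_GBPS}Gb/s lanes at ~{baud:.0f} GBd PAM4")
```

Under these assumptions, 200GbE through 1.6TbE map to 1, 2, 4, and 8 lanes, each signaling at a nominal 100 GBd before FEC overhead.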

At the same time, the Ultra Ethernet Consortium (UEC) is focused on delivering a complete, cost-effective Ethernet architecture that is optimized and scalable for high-performance AI. To support its mission of an integrated solution, it has formed eight working groups: Physical Layer, Link Layer, Transport Layer, Software Layer, Storage, Management, Compliance & Test, and Performance & Debug.

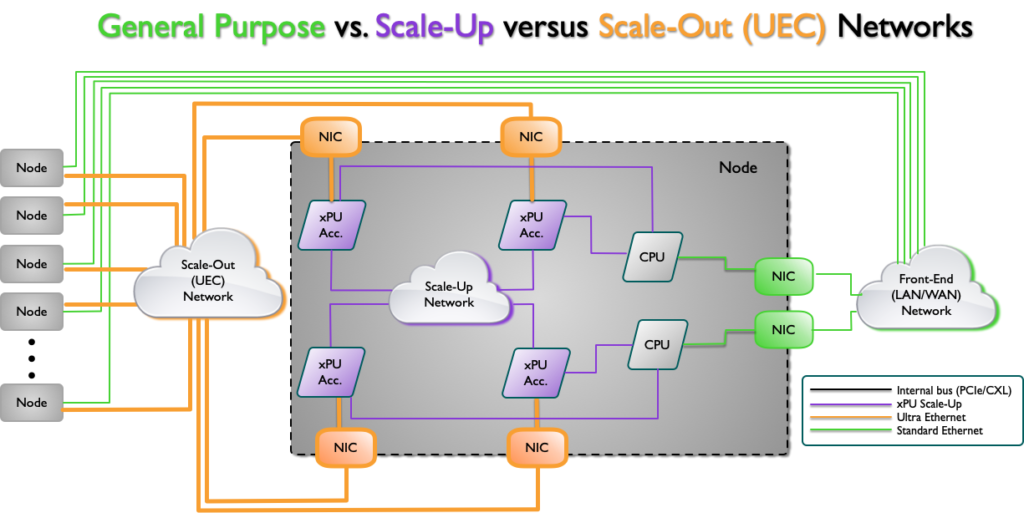

It is important to understand the role of these two complementary efforts and their interaction to support AI workloads. The diagram below illustrates one type of an AI architecture topology where there are several different networks, each serving a different function. On the front-end are the traditional Ethernet network connections, while on the backend you have a scale-out (UEC) network. There is also a scale-up network interconnecting the CPUs and xPU accelerators. It is important to note that each of these networks is unique with different requirements and constraints.

Source: Ultra Ethernet Consortium

The growth in computational power required for AI, however, already has the industry looking beyond the Ethernet development efforts currently underway in IEEE 802.3 and the UEC.

Perhaps the most obvious area for exploration is the traditional step of effectively doubling the signaling rate of the underlying electrical and optical data streams. While obvious, this is in reality a multi-dimensional physical layer problem, one that could also affect the AI network stack.
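One way to see why doubling the signaling rate is multi-dimensional is to compare modulation formats for a hypothetical 400Gb/s lane, twice the 200Gb/s rate now being standardized. The Python sketch below computes the ideal symbol rate for PAM4, PAM6, and PAM8, along with the loss of vertical eye opening relative to NRZ at the same peak-to-peak swing, 20·log10(M - 1) dB for M levels. Higher-order formats lower the symbol rate but shrink the spacing between levels, shifting the burden from channel bandwidth to signal-to-noise ratio. The 400Gb/s target, the candidate formats, and the function names are assumptions for illustration only; FEC overhead is ignored, and practical PAM6 bit mappings carry somewhat less than the ideal log2(6) bits per symbol.

```python
import math

TARGET_LANE_GBPS = 400  # hypothetical doubled lane rate (not a defined Ethernet rate)


def symbol_rate_gbd(bit_rate_gbps: float, levels: int) -> float:
    """Ideal symbol rate for PAM-M, assuming log2(M) bits per symbol and no FEC overhead."""
    return bit_rate_gbps / math.log2(levels)


def eye_penalty_db(levels: int) -> float:
    """Loss of vertical eye opening versus NRZ (PAM2) at the same peak-to-peak swing."""
    # PAM-M splits the swing into (M - 1) eyes, each 1/(M - 1) as tall as the NRZ eye.
    return 20 * math.log10(levels - 1)


if __name__ == "__main__":
    for levels in (4, 6, 8):
        print(
            f"PAM{levels}: {symbol_rate_gbd(TARGET_LANE_GBPS, levels):.1f} GBd, "
            f"eye penalty {eye_penalty_db(levels):.1f} dB vs NRZ"
        )
```

Running it shows PAM4 needing 200.0 GBd with a 9.5 dB penalty, while PAM8 drops to 133.3 GBd at the cost of a 16.9 dB penalty; neither choice is free.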

It’s not simply a matter of selecting a modulation scheme. The different Ethernet networks (e.g., front end and back end) should be examined independently to determine the market timing, performance needs (including latency requirements), target reaches, and overall constraints of each. There may be some commonality, but this should not be assumed at the outset. Because these effects are cumulative (for instance, issues compound as scale increases), particular care should be taken to ensure a holistic approach. These discussions may also include examination of developments in copper interconnect, optical interconnect, and packaging technologies.

As the Ethernet community addresses its future with AI, such exploratory discussions will draw the interest of the entire industry. Given AI’s continuous appetite for computational power, this conversation needs to start now.

To that end, the Ethernet Alliance will host a two-day Technology Exploration Forum (TEF) on October 22 and 23, 2024, at the Santa Clara Convention Center. It will focus on “Ethernet in the Age of AI.”

This forum will bring together experts and key players from different organizations, including TEF Associate Sponsors the Ultra Ethernet Consortium (UEC), the Open Compute Project (OCP), and the Optical Internetworking Forum (OIF). The event is open to non-members as well as to the Ethernet Alliance’s own broad membership. Together, these two constituencies will bring a diverse set of backgrounds, ensuring lively discussion and debate.

[i] OpenAI blog post “AI and Compute,” addendum “Compute used in older headline results,” posted 7 November 2019 by Girish Sastry, Jack Clark, Greg Brockman, and Ilya Sutskever. <https://openai.com/blog/ai-and-compute/>