Test & Measurement: Planning for Performance: TEF 2021 Panel Q&A – Part 1

David Rodgers, Ethernet Alliance Events Chair and Teledyne LeCroy

Francois Robitaille, EXFO

John Calvin, Keysight Technologies

Steve Rumsby, Spirent

Market Drivers Impacting Test & Measurement

The final day of the Ethernet Alliance’s TEF21: The Road Ahead forum was punctuated by a lively discussion on performance planning in test and measurement.

In the Test & Measurement: Planning for Performance panel discussion, moderator and Ethernet Alliance Events Chair David Rodgers of Teledyne LeCroy, along with panelists John Calvin of Keysight Technologies (Validating Methods for Emerging 106Gbps Electrical and Optical Specifications relating to IEEE P802.3cu/P802.3ck); Steve Rumsby, Spirent (Recommended Design Practices for the Next Generation Ethernet Rate); and Francois Robitaille, EXFO (Full Compliance Validation of Next-Gen Transceivers), explored the diverse market drivers influencing test and measurement.

The panel emphasized critical impacts, from increased signaling speeds, to growing port counts with higher density, to standards and interoperability conformance. Discussions of the current state of play and of how test and measurement must evolve to keep pace with market and technology demands attracted strong audience interest and reaction.

At the presentation’s conclusion, panelists answered numerous questions about the test and measurement developments needed to address escalating speed and bandwidth requirements of the next era of Ethernet. Their insightful responses are captured below in the first of this two-part series.

The Future of Test Tools: Keeping Pace with Technology

David J. Rodgers: What kind of data do we need to collect, and how is that different from yesterday to today?

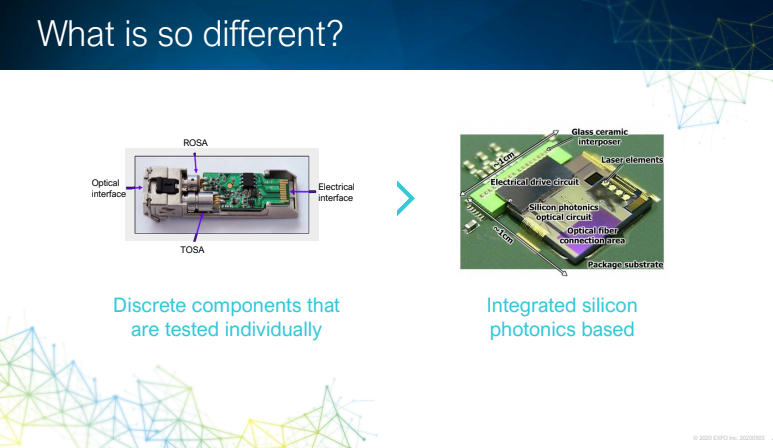

Francois Robitaille: I wouldn’t say data is fundamentally different. If you test the transceiver, you’re still going to need to test optical output power, your wavelength, central wavelength, and calibration data. It’s almost more about what’s surrounding the data. When packaging the die at the CM, for example, you’re typically going to measure insertion loss when you attach a coupling device. But what was the temperature at the coupling when you did the curing? How long did you do the curing? Those are all little accessory data points that you may not have thought of collecting, but that you need for root cause analysis. For example, am I seeing this condition because it didn’t cure long enough? I’ve seen that before, where we question “what was the root cause of that?” We need to have all the information about the environment in which the test was performed, not only the test data itself. That’s one thing we’re finding today. If you look today, pretty much every vendor is the same. You finish a component, and what do you do? You keep your pass/fail data, your birth certificate, you store data on a network somewhere, and unless you have an issue, you’re not going to look at it again. But now, with this integration, you need all those parameters surrounding your process to be collected as well if you want to be able to correlate the data between diverse stages. I would say that’s probably the biggest difference.
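As a rough illustration of the point above, the sketch below stores each test result together with the process conditions around it, so that results can later be grouped by unit and compared against a condition such as cure time. It is a minimal sketch, not any vendor's schema: the record layout, the stage names, the field names (`cure_time_s`, `cure_temp_c`, `insertion_loss_db`), the 90-second threshold, and every value in it are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class StageRecord:
    """One test result plus the process conditions it was taken under."""
    serial: str                                   # unit identifier shared across stages
    stage: str                                    # hypothetical stage name
    measurements: dict                            # the test data itself
    context: dict = field(default_factory=dict)   # surrounding process data


def history_by_serial(records):
    """Group records so each unit's stages can be read side by side."""
    grouped = defaultdict(dict)
    for rec in records:
        grouped[rec.serial][rec.stage] = rec
    return dict(grouped)


def mean_split_by_context(records, stage, context_key, threshold, measurement):
    """Average one measurement for units below and at/above a context threshold."""
    below, at_or_above = [], []
    for rec in records:
        if rec.stage != stage or context_key not in rec.context:
            continue
        bucket = below if rec.context[context_key] < threshold else at_or_above
        bucket.append(rec.measurements[measurement])
    return (mean(below) if below else None,
            mean(at_or_above) if at_or_above else None)


# Made-up illustrative values, not real measurements.
records = [
    StageRecord("SN001", "coupling", {"insertion_loss_db": 2.5},
                {"cure_time_s": 60, "cure_temp_c": 80}),
    StageRecord("SN002", "coupling", {"insertion_loss_db": 1.5},
                {"cure_time_s": 62, "cure_temp_c": 81}),
    StageRecord("SN003", "coupling", {"insertion_loss_db": 0.5},
                {"cure_time_s": 120, "cure_temp_c": 80}),
    StageRecord("SN004", "coupling", {"insertion_loss_db": 1.5},
                {"cure_time_s": 118, "cure_temp_c": 79}),
    StageRecord("SN001", "final_test", {"output_power_dbm": 0.5},
                {"ambient_temp_c": 25}),
]

# Compare insertion loss for short versus long cure times (threshold is arbitrary).
short_cure, long_cure = mean_split_by_context(
    records, "coupling", "cure_time_s", 90, "insertion_loss_db")
print(short_cure, long_cure)
```

Keeping the context in the same record as the measurement is what makes the later comparison a simple filter; if the cure conditions were never logged, no amount of pass/fail data could answer the "did it cure long enough?" question.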

David J. Rodgers: How are physical layer test practices evolving to meet the technological challenges of the next Ethernet reign?

John Calvin: There are a lot of different things that are holding the industry back. Really, the big roadblock now is the packaging; it’s not the instrumentation on the other side of it. People are going through all sorts of backflips to push a 53 gigabit stream out through a 40 gigahertz spigot, which is really about as good as it gets out of packaging today. So, we’re going to see the weak link going away. We’re going to see people using XSR and similar short-reach, very high-speed bus architectures that are just millimeters long on organic substrates. And that’s going to lead to a whole different set of testing problems for instrumentation, particularly with regard to signal access. Basic questions, like whether you go to a PAM-5 or a PAM-6 signal, are very real and on deck right now, because again, you can’t really go faster. And then there’s the clock recovery that comes with 96 gigabaud PAM-5. How are we going to get clock recovery to work on that? These are challenges that keep us up at night. That’s a quick rundown of some of the ways that test and measurement (T&M) and test instrumentation will have to evolve in the next five years.
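To put rough numbers on the trade-off between symbol rate and the number of PAM levels, here is a back-of-the-envelope sketch. It assumes an idealized link in which every symbol carries log2(levels) bits, which is only an upper bound: practical PAM-5 or PAM-6 encodings and FEC overhead deliver less. The function names are hypothetical, and the symbol rates and the 40 GHz figure are simply the ones quoted in the answer above.

```python
import math


def bits_per_symbol(levels):
    """Idealized upper bound on bits carried by one PAM symbol."""
    return math.log2(levels)


def raw_bit_rate_gbps(baud_gbd, levels):
    """Upper-bound raw bit rate, ignoring coding and FEC overhead."""
    return baud_gbd * bits_per_symbol(levels)


def nyquist_ghz(baud_gbd):
    """Nyquist frequency, i.e. half the symbol rate."""
    return baud_gbd / 2


PACKAGE_BANDWIDTH_GHZ = 40  # the packaging figure quoted in the panel answer

for baud, levels in [(53, 4), (96, 4), (96, 5), (96, 6)]:
    rate = raw_bit_rate_gbps(baud, levels)
    nyq = nyquist_ghz(baud)
    print(f"{baud} GBd PAM-{levels}: up to {rate:.1f} Gb/s raw, "
          f"Nyquist {nyq:.1f} GHz, "
          f"{'above' if nyq > PACKAGE_BANDWIDTH_GHZ else 'within'} "
          f"{PACKAGE_BANDWIDTH_GHZ} GHz")
```

Under these assumptions, adding levels raises the bit rate without raising the Nyquist frequency, which is one way to read the interest in PAM-5 and PAM-6 once the package bandwidth stops scaling. The price is smaller eye openings per level, which is part of why clock recovery becomes harder.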

David J. Rodgers: What are the changes needed for the signal and protocol traffic generation suppliers to keep pace with the technology advances?

Steve Rumsby: Make sure you’re doing the right things to qualify your PHY, and continue to monitor your PHY. Don’t take the PHY for granted. If you can’t measure it, you can’t affect it. That’s T&M 101. I think measurement of these signals, from an observability perspective, just goes from hard to almost impossible in terms of monitoring live signals. That means partnering with your device component suppliers (gearboxes, for example) and making sure you’ve got good, complete access to that data. And again, make sure you’re using that data the right way, as enablement to get observability, at least from that intermediate point.

David J. Rodgers: You touched on something that I don’t think we’ve addressed enough, and that is the complexities the market faced moving from 10G to 25G. Now we’ve added even more difficulty with PAM-4 50G and, of course, speed begets complexity. Speed begets problems. But one thing we’re starting to see is the need for Network Equipment Manufacturers (NEMs) to create these relationships with us, the test and measurement community. It’s not like buying a hard drive or buying a piece of flash storage. You must establish relationships and partnerships with test and measurement companies. The evolution is not just going out and buying a scope from a scope vendor, but sitting down with that test vendor and addressing what your goals are. We help address the challenges the technology is presenting today in the way of roadmaps. The industry is on the way to implementing the roadmap and needs to create partnerships with test and measurement companies. Is that how you see it, Francois, as well?

Francois Robitaille: Yes, definitely. I think it’s becoming more and more important because you have different vendors or suppliers you want in your food chain. You have the foundry, the CM, and ultimately you need the transceiver manufacturer or transceiver user. You need to have some coherence along the process to be able to identify where the issues are coming from. If you don’t partner with the test vendors there may be a disconnect somewhere in the process, which will prevent you from getting valid information.

To access TEF21: Test & Measurement: Planning for Performance on demand, and all of the TEF21 on-demand content, visit the Ethernet Alliance website.