Technical Feasibility: Next Generation Optical Interfaces – TEF21 Panel Q&A – Part 2

Mark Nowell, Ethernet Alliance Board Advisory Chair, Cisco

Scott Schube, Intel

Matt Traverso, Cisco Systems

David Lewis, Lumentum

Xinyuan Wang, Huawei

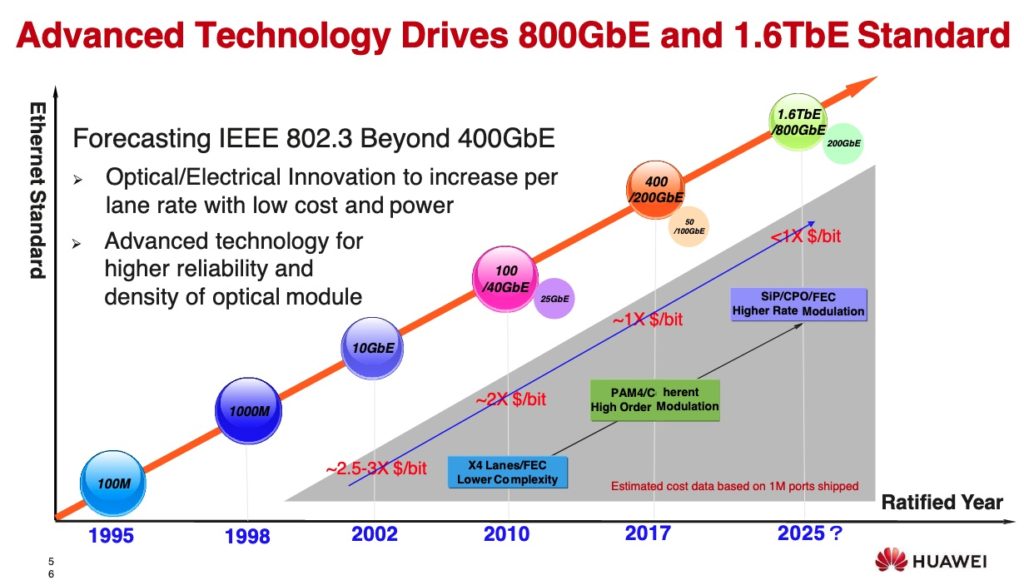

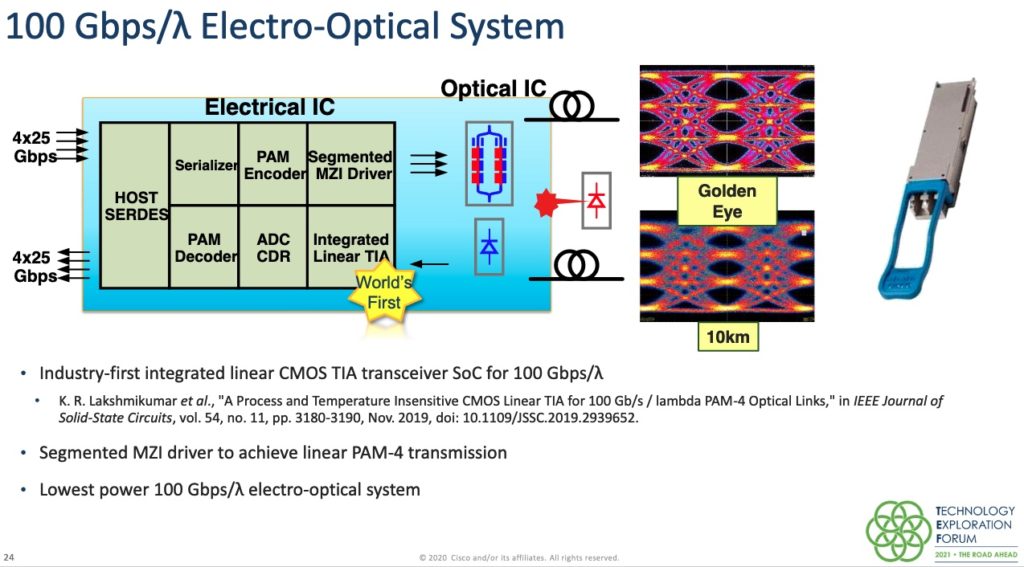

The optical technology industry is heading into a time of transition. As 100G per lambda technology matures and becomes established as a key building block for networks, the question becomes: what are the next steps for the industry to consider? Day four of the TEF21: The Road Ahead conference focused on new directions for next-generation optical interfaces in the session Technical Feasibility: Next-Generation Optical Interfaces. Moderator Mark Nowell of Cisco, Ethernet Alliance Board Advisory Chair, was joined by panelists David Lewis of Lumentum (Performance Photonics Enabling Next Generation Interfaces); Scott Schube of Intel (Scaling Bandwidth with Optical Integration); Matt Traverso of Cisco (Scaling Architecture for Next Gen Optical Links); and Xinyuan Wang of Huawei (Observations on the Rate of Beyond 400GbE, 800GbE and/or 1.6TbE). They shared their views on those transitional next steps, including speeding up the building blocks to support the next rates, understanding how to re-package technologies to enable co-packaged optics, and understanding the trade-offs between direct detect and coherent technologies.

Afterward, the audience asked the panelists numerous questions about the future of optical interfaces. Their insightful responses are captured below in part two of a two-part series.

Optical priorities


Mark Nowell: Looking at the Beyond 400G project that’s kicking off in the IEEE, what, from your perspective, is the low-hanging fruit that the group can define? If you’re looking at, say, 800G, what is the path to taking something to higher volume from an optical perspective?

Dave Lewis: I would say that you need a PCS (physical coding sublayer) defined before we do anything in terms of PMDs (physical medium dependent sublayers). You need to know what the lanes look like and how many of them there are to transport the 800G. That is a thorny area because it gets straight into a FEC (forward error correction) discussion, and the FEC folks want to know what error rate you’re going to deliver from the interconnect between the PCSs, so we go around in that circle again.

Scott Schube: Focusing more on the physical lane side, doing an 800GbE version of 100G-per-lane interfaces is relatively low effort, to the point where, for a parallel 8 X 100G, to some extent you don’t need any more optical work. That already exists, although today it’s deployed as 2 X 400G. There are other options. If you wanted to do, let’s say, an 8 X 100G WDM on duplex fiber, that’s some more work, but less than it would be for a 200G-per-lane optic. Ultimately, of course, the working group would want to address 200G-per-lane versions.

Mark Nowell: Clearly the step to 200G optical is not without its challenges. What do you see in terms of cost, power, or timeline between something like an 8 X 100G solution, whether it’s parallel or duplex, and a 4 X 200G parallel or duplex solution? Is there any comparison point that can be drawn between those two in terms of time, cost, and power? Or is it too early?

Scott Schube: I guess we could say that, directionally, the timing is interwoven with the cost, at least from my point of view. For instance, if you’re talking about 800G as a four-channel 4 X 200G, eventually the cost floor will be lower. But if you were deploying something this year, the cost of a 4 X 200G is effectively infinite because it doesn’t exist, right? So, from my point of view, if you wanted to deploy something in the next 2-3 years, 8 X 100G is going to be your best bet in terms of general feasibility and cost. As you go out in time, at some point 200G per lane will be the right way to go, but I don’t know when that crossover point will be. It depends on how well we all, in our respective companies, execute on the technology.

Dave Lewis: I think there’s an expectation in the industry that, if 8 X 100G is the next generation and 4 X 100G is what we have today, the 8 X 100G would be on a path to at least cost parity with the 400G solution. Then cost per bit starts out at 50 percent of what it was. Obviously, having to develop a whole load of new devices, do all the experiments, and do the transmission measurements at a new speed like 200G X 4 is a much bigger project, though it would eventually lead to the goal of lower cost per bit and lower power per bit. The question is how quickly you need it.
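Lewis’s parity argument is simple arithmetic: if an 8 X 100G module eventually costs the same as today’s 4 X 100G (400G) module, it carries twice the bits for the same price. A minimal sketch, assuming module costs normalized to an arbitrary unit; the function name `cost_per_gbps` is hypothetical and used only for illustration:

```python
def cost_per_gbps(module_cost: float, lanes: int, gbps_per_lane: int) -> float:
    """Cost per Gb/s for a module carrying `lanes` lanes of `gbps_per_lane` each."""
    return module_cost / (lanes * gbps_per_lane)


# Hypothetical normalization: today's 400G (4 X 100G) module costs 1.0 units.
today_400g = cost_per_gbps(1.0, lanes=4, gbps_per_lane=100)

# Assumption taken from the discussion: 8 X 100G reaches cost parity with 400G.
parity_800g = cost_per_gbps(1.0, lanes=8, gbps_per_lane=100)

ratio = parity_800g / today_400g
print(f"800G cost per bit relative to 400G: {ratio:.0%}")  # prints ... 50%
```

Because 8 X 100G and 4 X 200G both carry 800G, their cost per bit differs only through module cost, which is why the panelists frame the choice between them as a question of timing and execution.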

Key Considerations for Co-Packaged Optics (CPOs)

Mark Nowell: Regarding co-packaged optics, there are three potential options for the light sources at a system level. You could have an external light source. You could have integrated lasers with some kind of redundancy. Or you could have just simple single-laser integration. There are pros and cons to all of these. I’d like to understand what the direction is and what you think the challenges may be around reliability or flexibility.

Matt Traverso: I think the external light source has some advantage in terms of fungibility, where we can use different devices. That is, of course, predicated on having a broadband coupler: having something with good coupling that can work over the entire band is really critical. It also allows you to have different wavelengths and different schemes depending on the optical power required for a particular channel. But that again goes back to the idea that a photon is a terrible thing to waste. It’s really precious, but having a platform like that is really important.

Scott Schube: I think it’s highly likely that multiple approaches will co-exist in initial deployment and maybe even in ongoing deployment, similar in some ways to how different approaches and different technologies co-exist within current systems. I think there is room for that kind of experimentation, so remote lasers, the integrated laser, and the integrated laser with redundancy are all potential options, and I think they will probably co-exist. As for the trade-offs, an integrated laser has the promise of lower cost: you lose fewer photons than when coupling in from an external light source. However, an external light source has two potential advantages. First, it could be placed in a cooler location inside the platform. Second, if it could be worked out properly, you could potentially have a model where you can replace that external laser source. I think those are the trade-offs customers seem to be working through when we talk to them. The right answer is to be determined. We’re obviously big believers in the integrated laser as the way to reduce costs and the right way to go, but I would say there’s no one right answer.

Dave Lewis: There’s a project started in OIF on co-packaging, which we’ve contributed to, basically pointing out that we need to agree on some specs. The message we’re getting back is that cost per watt is the most important parameter for enabling many of the applications. If you look at a switch, how many total watts of optical power do you need from your external light sources? Then count how many of those switches there are, add them all up, and suddenly you’re in the power station business, generating optical power. Cost per watt is important, and the only way cost can get to where it needs to be is if everybody doesn’t have a different set of requirements in terms of wavelength and power, whether the source is cooled or uncooled, and so on. The current thinking is that the low-hanging fruit is CWDM4. There are four wavelengths, and you need lots of optical power. So how low can the cost of those four lasers go, and can you meet everybody’s needs with one product or not? It’s currently more of a custom business. People are saying, “Well, here’s what we want. These wavelengths. This amount of power. And we’re going to split it this way,” et cetera. So you’re doing a custom design, and for us, that’s not a business we are used to. But generating lots of optical power at low cost is where it needs to get to. I think the industry has to make some decisions on what specs are needed, what wavelengths are needed, and what the architecture looks like for these CPO systems.
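Lewis’s “power station” point is a multiplication across the fleet: optical power per lane, times lanes per switch, times number of switches, with the light-source bill then scaling with cost per watt. A minimal sketch; the class and function names are hypothetical, and every number below (lane count, milliwatts per lane, switch count, cost per watt) is an illustrative placeholder, not a figure from the panel:

```python
from dataclasses import dataclass


@dataclass
class CpoSwitch:
    """A hypothetical CPO switch fed by external light sources."""
    optical_lanes: int   # lanes that draw light from the external source
    mw_per_lane: float   # optical power each lane needs, in milliwatts

    def optical_watts(self) -> float:
        return self.optical_lanes * self.mw_per_lane / 1000.0


def fleet_optical_watts(switch: CpoSwitch, num_switches: int) -> float:
    """Total optical power the light sources must generate across a fleet."""
    return switch.optical_watts() * num_switches


def light_source_cost(total_watts: float, cost_per_watt: float) -> float:
    """Light-source cost scales linearly with cost per watt (arbitrary units)."""
    return total_watts * cost_per_watt


# Placeholder inputs, chosen only to illustrate the scaling.
switch = CpoSwitch(optical_lanes=512, mw_per_lane=20.0)
total_w = fleet_optical_watts(switch, num_switches=10_000)
print(f"per switch: {switch.optical_watts():.2f} W, fleet: {total_w / 1000:.1f} kW")

# Halving cost per watt halves the light-source bill for the same fleet.
for cpw in (2.0, 1.0):
    print(f"cost per watt {cpw}: total {light_source_cost(total_w, cpw):,.0f}")
```

Because the total is a product, driving down cost per watt pays off across every switch in the fleet, which is the motivation Lewis gives for converging on common wavelength and power specs that let one product serve many customers.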

Mark Nowell: Clearly, the transition to CPO is going to be a fairly significant investment for potential suppliers of CPO devices, components and systems. Do you have a sense of what kind of volume you need to see and what kind of adoption you need to see to make that CPO ROI reasonable?

Dave Lewis: In the laser business, which, as I described, is what we do, we supply chips. We don’t put them in modules anymore; in most cases, we don’t even put them on carriers except for experimental and evaluation purposes. We’re selling laser die, and to make a business out of it, you need to be selling millions of the same die per year, I would say. That’s foreseeable if you count up how many 400G ports there are going to be: the volume of business is definitely out there. That’s the sort of volume needed to make a reasonable return on investment.
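Lewis’s threshold of “millions of the same die per year” can be checked by counting ports. A minimal sketch, assuming a hypothetical annual port count and a hypothetical number of laser die per port (for example, one die per wavelength of a four-wavelength source), and taking one million as the low end of “millions”; none of these inputs come from the panel:

```python
def die_per_year(ports_per_year: int, die_per_port: int) -> int:
    """Laser die consumed per year if every port uses the same die."""
    return ports_per_year * die_per_port


# Rough reading of Lewis's threshold for a viable laser-die business.
VIABLE_DIE_PER_YEAR = 1_000_000

# Hypothetical inputs for illustration only.
ports = 500_000   # assumed 400G ports shipped per year
per_port = 4      # assumed die per port (one per wavelength)

volume = die_per_year(ports, per_port)
print(f"{volume:,} die/year; viable: {volume >= VIABLE_DIE_PER_YEAR}")
```

The multiplication only holds if the die is identical across customers, which ties the volume question back to the need for common specs.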

Matt Traverso: I think the other thing, and the reason I keep harping on the SerDes, is that there’s a fallacy out there that the optical comes first. Really, the electrical has to come first, because you need a serializer to get that working. What’s happened historically is that we’ve had some optical PHY, like a gearbox or something else, to aggregate those different lanes together, and it has to have the most cutting-edge serializer and deserializer. That’s really what has driven the cost to the next rate historically. I think that’s why all of us are highlighting that aligning to the line rate on the electrical side is important, so we can leverage it, because making CPO happen will be a major undertaking.