Market Need – The Next Rate: TEF21 Panel Q&A – Part 2

Peter Jones, Ethernet Alliance Chair and Cisco

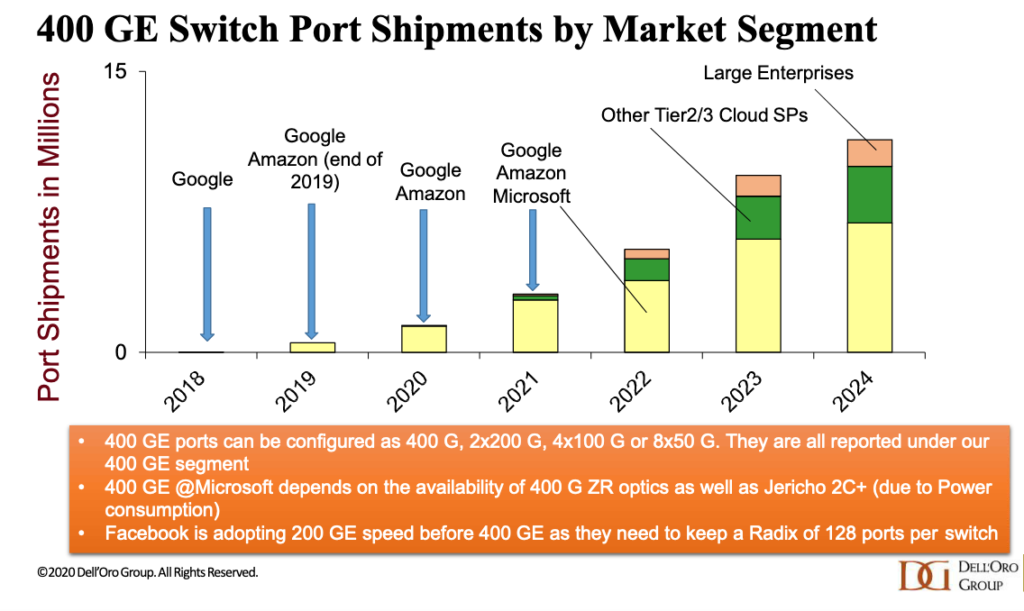

Sameh Boujelbene, Dell’Oro

David Ofelt, Juniper Networks

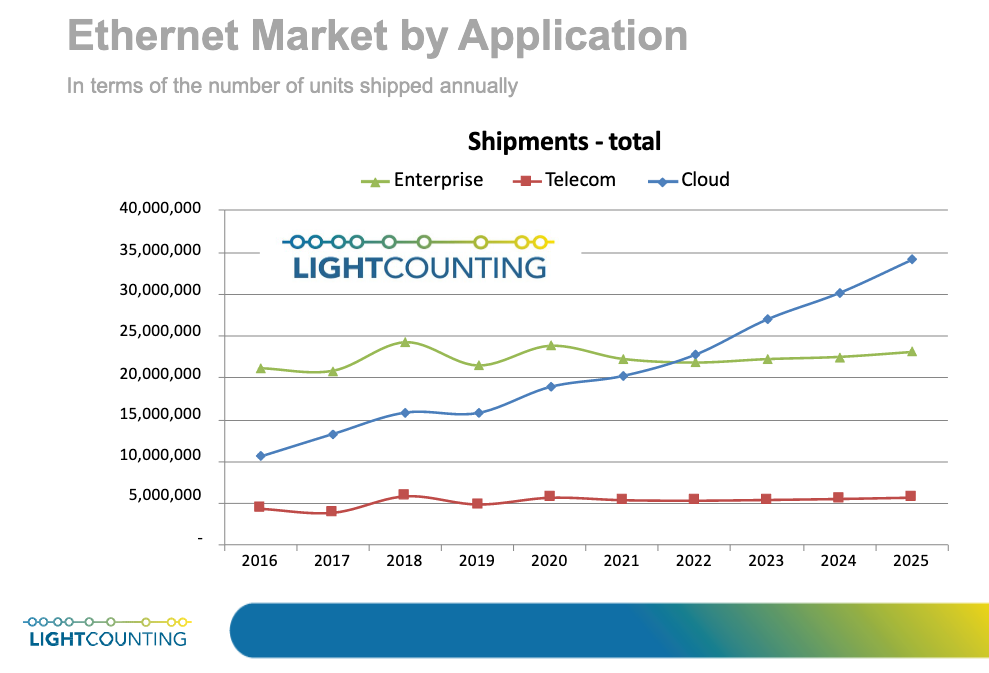

Vlad Kozlov, LightCounting

Market Drivers for Next-Gen Ethernet

On the first day of the Ethernet Alliance’s TEF21: The Road Ahead, we heard from two industry analysts and a long-time member of the Ethernet industry who discussed the market needs driving the push to higher bandwidths. In Market Need – the Next Rate, moderator Peter Jones, Ethernet Alliance chair, along with panelists Sameh Boujelbene of Dell’Oro (Market Drivers for Next Generation Ethernet Speeds), David Ofelt (What Comes after 400GbE), and Vlad Kozlov (Diverging Needs of Enterprise and Cloud Customers), looked at the pace of speed migration across market segments, from enterprise to data center to cloud, and the challenges associated with getting to the next speed. Panelists considered the building blocks that are a necessary part of the upgrade cycle and the factors that will drive markets to the next speed.

At the presentation’s conclusion, the audience engaged panelists with several questions about the pace and direction of technology development needed to address escalating speed and bandwidth requirements. Their insightful responses are captured below in part two of a two-part series.

Impact of Network Design on Rate Increases

Peter Jones: There’s been much discussion about co-packaged optics (CPO). When does it really happen, and more interestingly, how does it affect deployment and operation in a massively scalable data center (MSDC) space?

David Ofelt: I think it becomes real at different points for different end-users. It depends on what sort of upgrade cycle you’re on – 50G to 100G, 800G or 100G, 400G, 1.6T. And that’s going to change based on what mix of interface types you need, because not all interface types are going to be available on day one. We’ll probably have something simple, like single mode, pretty quickly. But the next generation ZR is going to take a while to become practical in a module with the same density as 400G. I don’t have a good prediction for it, but there’s going to be a reasonable period of time when it trickles out, and it’ll become interesting to each of the users for very different reasons.

Sameh Boujelbene: I think it’s very important to keep in mind what issues CPO solves. Basically, it solves thermal and density problems that pluggable optics will inevitably hit when we go to higher speeds. A large share of the people I interviewed agreed that 100T chips are the point at which CPO becomes a must and will become a reality. The ecosystem would also have enough time to be ready by then. I can see some end-users, some cloud service providers, having early implementations even at 51.2T. That will be early adoption, small volume. That’s because some of the hyper-scalers are more power-constrained than others, and also because they have chosen to start early, since there is a learning curve. They want to be ready when they have to be ready. Mass adoption is going to be beyond 51.2T, but we may see some early adoption volume at 51.2T.

Peter Jones: There have been some questions about radix and speeds. If I understand correctly, it’s going to depend. People will design their networks differently. So, there probably is no perfect answer.

David Ofelt: For any given vendor there is an answer, and they can count on that. And that’s going to be true from end-user to end-user. With any technical inflection that happens, our current crop of big end-users will take advantage of it. They can change their architecture pretty quickly because they own their own stack. Their data center is a building-scale supercomputer that is completely proprietary to first order. What we need to do as an industry is pay careful attention to what they need if the current pattern continues, but also not hold back from finding inflection points and building stuff that is better on some axis. Because to some extent, if you build it, people will implement it.

Sameh Boujelbene: I totally agree. It makes perfect sense. And we’ll see different implementations and different choices, yes.

Peter Jones: How do you see the impact of power showing up in the market?

Sameh Boujelbene: I’m glad you asked that. Power is already impacting the timeline of some of the hyper-scalers in terms of their ability to move to higher speeds, because they are power-constrained. They need to wait for newer technology that works within their existing power budgets. Things will only get worse as we move to higher speeds.

Vlad Kozlov: People are already using liquid cooling to keep systems from melting. If you look at the AI clusters, they’re using much more powerful chips to begin with, and they are ready to put liquid cooling in. I completely agree that power consumption is important. The other part of the story is that many of the new applications AI is starting to provide may reduce power consumption. Is there smart technology to control power consumption across a city? It makes sense to invest power in a big data center if overall power consumption goes down as a result. Again, returning to what I said earlier, I think the AI clusters within data centers are going to propel some new architectures, and we’ll have to see what kind of radix they would like to use. Are they going to use some new optical technologies like optical switching? Very likely so.

David Ofelt: Even if the end-users were able to accept any power we wanted to ship them, at some point we can’t build the box. We can’t get enough power into it, we can’t get enough heat out of it, and it doesn’t scale well. We have boxes that burn more fan power than our old core routers did in total. And that’s true across the industry. So, power is incredibly important, both from a building standpoint and from an end-user consumability standpoint. I’m supportive of all of the lower-power statements.

To access TEF21: Next Generation Electrical Interfaces on demand, and all of the TEF21 on-demand content, visit the Ethernet Alliance website.